# Necessary packages from original code

library(lattice)

library(ggplot2)

library(ggmap)

library(lme4)

library(mgcv)

library(R2jags)

library(plyr)

# Recommended by the authors but outdated

# library(maptools) => We will use other packages

# library(sp) => We won't need it with sf

# library(rgdal) => We won't need it with sf

# Using sf

library(sf)

# Other additionnal packages to improve upon the provided code

# We also setup for rgl plots in the webpage

library(lubridate)

library(gganimate)

library(here)

library(leaflet)

library(equatiomatic)

library(knitr)

library(stargazer)

library(sjPlot)

library(rgl) ; knitr::knit_hooks$set(webgl = hook_webgl)

library(htmlwidgets)Zuur & Ieno’s 10 steps for regression analyses

This page is a reproducible exploration of “A protocol for conducting and presenting results of regression-type analyses” by Alain F. Zuur, and Elena N. Ieno.

The 10 steps are all first presented in figure 1 of the paper:

The focus of the 10 steps on on linear modelling of the type GLM, GLMM etc and uses R although it generalizes to other languages.

Accessing data and code for reproducibility

The paper provides data and code on Dryad but is not set up for interactive report-style reproducibility with package versioning. To fix that, I used a quarto document with renv to produce this website. In order to reproduce the analysis here, you can clone the repository in RStudio, install the renv package, run renv::restore() and you should be good to go for running the quarto notebook!

I had to make some changes to the packages used. Let’s load what we will need now. Packages maptools and rgdal are deprecated as far as I can tell, and sp is just not recommendable for forward compatibility in 2024.

In order to access the data and code, we would ideally use a package like rdryad to do so, but I have been getting nowhere with it. It is probably broken as it is soon to be superseded by the deposits package. If I forget to update this website with the latest deposits API, feel free the file an issue.

In the meantime, let’s download the files one by one.

# Create the file urls and destination files names & names

base_dryad_url <- "https://datadryad.org/stash/downloads/file_stream/"

file_url_list <- paste0(base_dryad_url, c(37547:37550))

files_names <- c("monkeys.txt", "owls.txt", "oystercatchers.txt", "zurr_iena_2016.R")

files_paths_list <- c(here("data", "zuur_ieno_2016", files_names[1:3]), here("scripts", files_names[4]))

# If the file exists already, do not download it

ret <- mapply(\(file_url, file_path) {

if (!file.exists(file_path)) download.file(file_url, destfile = file_path)

}, file_url_list, files_paths_list)You can take a look at the code in the scripts directory, we will be copying code from there into this document. Now, let’s load the data properly before we get anything else done.

Owls <- read.table(here("data","zuur_ieno_2016", "owls.txt"),

header = TRUE,

dec = ".")

# SiblingNegotiation is too long....use shorter name:

Owls$NCalls <- Owls$SiblingNegotiation

# Let's look at it

names(Owls) [1] "Nest" "Xcoord" "Ycoord"

[4] "FoodTreatment" "SexParent" "ArrivalTime"

[7] "SiblingNegotiation" "BroodSize" "NegPerChick"

[10] "Date" "Day" "Month"

[13] "NCalls" str(Owls)'data.frame': 599 obs. of 13 variables:

$ Nest : chr "AutavauxTV" "AutavauxTV" "AutavauxTV" "AutavauxTV" ...

$ Xcoord : int 556216 556216 556216 556216 556216 556216 556216 556216 556216 556216 ...

$ Ycoord : int 188756 188756 188756 188756 188756 188756 188756 188756 188756 188756 ...

$ FoodTreatment : chr "Deprived" "Deprived" "Deprived" "Deprived" ...

$ SexParent : chr "Male" "Male" "Male" "Male" ...

$ ArrivalTime : num 22.2 22.5 22.6 22.6 22.6 ...

$ SiblingNegotiation: int 4 2 2 2 2 18 18 3 3 3 ...

$ BroodSize : int 5 5 5 5 5 5 5 5 5 5 ...

$ NegPerChick : num 0.8 0.4 0.4 0.4 0.4 3.6 3.6 0.6 0.6 0.6 ...

$ Date : chr "12/07/97" "12/07/97" "12/07/97" "12/07/97" ...

$ Day : int 12 12 12 12 12 12 12 12 12 12 ...

$ Month : int 7 7 7 7 7 7 7 7 7 7 ...

$ NCalls : int 4 2 2 2 2 18 18 3 3 3 ...head(Owls) Nest Xcoord Ycoord FoodTreatment SexParent ArrivalTime

1 AutavauxTV 556216 188756 Deprived Male 22.25

2 AutavauxTV 556216 188756 Deprived Male 22.53

3 AutavauxTV 556216 188756 Deprived Male 22.56

4 AutavauxTV 556216 188756 Deprived Male 22.61

5 AutavauxTV 556216 188756 Deprived Male 22.65

6 AutavauxTV 556216 188756 Deprived Male 22.76

SiblingNegotiation BroodSize NegPerChick Date Day Month NCalls

1 4 5 0.8 12/07/97 12 7 4

2 2 5 0.4 12/07/97 12 7 2

3 2 5 0.4 12/07/97 12 7 2

4 2 5 0.4 12/07/97 12 7 2

5 2 5 0.4 12/07/97 12 7 2

6 18 5 3.6 12/07/97 12 7 18OC <- read.table(here("data", "zuur_ieno_2016", "oystercatchers.txt"),

header = TRUE,

dec = ".")

# Let's look at it

names(OC)[1] "ShellLength" "Month" "FeedingType" "FeedingPlot"str(OC)'data.frame': 197 obs. of 4 variables:

$ ShellLength: num 1.9 2.16 2.17 2.34 2.2 2.2 1.92 2.11 2.17 2.41 ...

$ Month : chr "Dec" "Dec" "Dec" "Dec" ...

$ FeedingType: chr "Hammerers" "Hammerers" "Stabbers" "Hammerers" ...

$ FeedingPlot: chr "B" "B" "B" "B" ...head(OC) ShellLength Month FeedingType FeedingPlot

1 1.90 Dec Hammerers B

2 2.16 Dec Hammerers B

3 2.17 Dec Stabbers B

4 2.34 Dec Hammerers B

5 2.20 Dec Stabbers B

6 2.20 Dec Hammerers BMonkeys <- read.table(here("data", "zuur_ieno_2016", "monkeys.txt"),

header = TRUE)

# Let's look at it

names(Monkeys) [1] "SubordinateGrooms" "DominantGrooms" "RankDifference"

[4] "Relatedness" "GroomSymmetry" "Time"

[7] "FocalHour" "FocalGroomer" "Receiver"

[10] "GroupSize" str(Monkeys)'data.frame': 1674 obs. of 10 variables:

$ SubordinateGrooms: chr "yes" "yes" "yes" "yes" ...

$ DominantGrooms : chr "no" "yes" "yes" "no" ...

$ RankDifference : num 0.697 0.632 0.169 0.378 0.511 ...

$ Relatedness : num 0.224 0.682 0.707 0.291 0.453 ...

$ GroomSymmetry : int 1 1 0 1 1 1 0 1 1 1 ...

$ Time : num 4.19 5.6 6.16 6.98 2.85 ...

$ FocalHour : int 71 72 72 73 74 75 75 75 75 75 ...

$ FocalGroomer : chr "ade" "vic" "vic" "pre" ...

$ Receiver : chr "ban" "yao" "pre" "nai" ...

$ GroupSize : chr "large" "large" "large" "large" ...head(Monkeys) SubordinateGrooms DominantGrooms RankDifference Relatedness GroomSymmetry

1 yes no 0.6969321 0.2238303 1

2 yes yes 0.6324555 0.6823489 1

3 yes yes 0.1690309 0.7071068 0

4 yes no 0.3779645 0.2913760 1

5 yes yes 0.5107539 0.4529901 1

6 yes yes 0.2948839 0.4539824 1

Time FocalHour FocalGroomer Receiver GroupSize

1 4.186111 71 ade ban large

2 5.601667 72 vic yao large

3 6.164167 72 vic pre large

4 6.976111 73 pre nai large

5 2.850556 74 dys ver small

6 8.605278 75 pox ecz smallStep 1: State appropriate questions

The key idea is to have the salient question of the analysis in mind at the start, and formulate them properly. The example here is a study on the “vocal behavior of barn owl siblings” with a N = 28. The hypothesis is that food availability will influence “sibling negotiation”, proxied by the number of calls in the nest, sampled with microphones. Half the nests get extra food (treatment: satiated) and the other half is starved (treatment: deprived ; surprisingly no control?).

The 3 covariates are time, food treatment (satiated or deprived) and sex of parent. The question is :

- Does the relationship between sibling negotiation and sex of the parent differ with food treatment, and does the effect of time on sibling negotiation differ with food treatment?

Note that the question contains the 3 terms and expected interactions.

The authors warn against breaking down the questions into smaller questions such as “Is there an effect of sex of the parent?”, as “A potential problem with this approach is that the residuals of one model may show patterns when compared with the covariates not used in that model, which invalidates the model assumptions.”

I find this a little surprising because I was taught to build simple models before complex ones, and would naturally work with simpler single covariate models first.

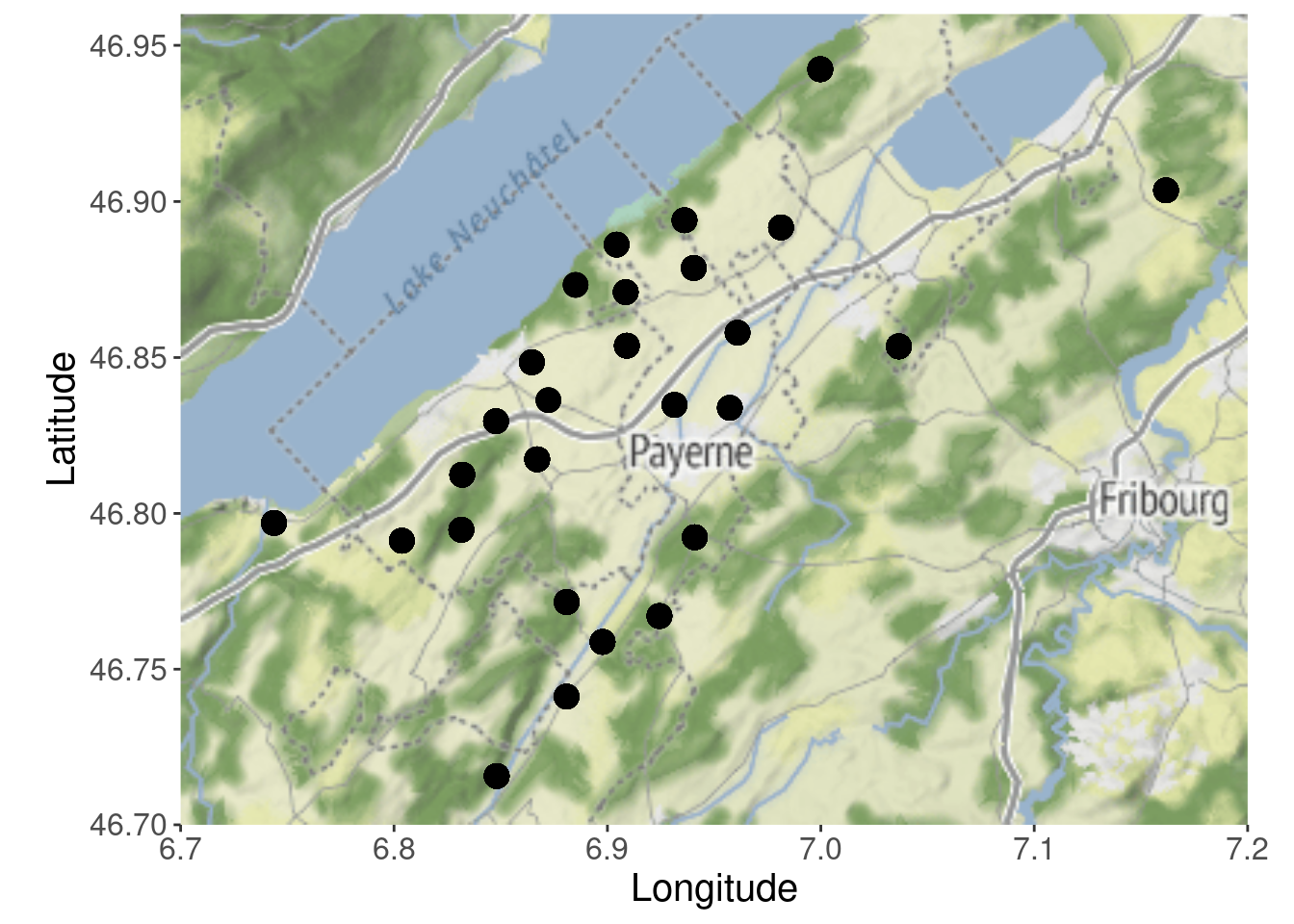

Step 2: Visualize the experimental design

This step is simple yet sometimes overlooked: visualize the sampling protocol and experimental design preferably with the help of a map.

Below we use the sf package to parse the data as spatial data.

# Parse the dataframe as a sf object with the proper projection, and reproject as

# WGS 84 CRS (LAT / LONG)

Owls_sf <- st_as_sf(Owls, coords = c("Xcoord", "Ycoord"))

WGS84 <- st_crs("+proj=longlat +datum=WGS84")

projSWISS <- st_crs("+init=epsg:21781")Warning in CPL_crs_from_input(x): GDAL Message 1: +init=epsg:XXXX syntax is

deprecated. It might return a CRS with a non-EPSG compliant axis order.st_crs(Owls_sf) <- st_crs(projSWISS)

Owls_sf_tmp <- st_transform(Owls_sf, WGS84)

Owls_sf_wgs84 <- cbind(Owls_sf_tmp, st_coordinates(Owls_sf_tmp))

# Let's look at it

head(Owls_sf_wgs84)Simple feature collection with 6 features and 13 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 6.864574 ymin: 46.84849 xmax: 6.864574 ymax: 46.84849

Geodetic CRS: +proj=longlat +datum=WGS84

Nest FoodTreatment SexParent ArrivalTime SiblingNegotiation BroodSize

1 AutavauxTV Deprived Male 22.25 4 5

2 AutavauxTV Deprived Male 22.53 2 5

3 AutavauxTV Deprived Male 22.56 2 5

4 AutavauxTV Deprived Male 22.61 2 5

5 AutavauxTV Deprived Male 22.65 2 5

6 AutavauxTV Deprived Male 22.76 18 5

NegPerChick Date Day Month NCalls X Y

1 0.8 12/07/97 12 7 4 6.864574 46.84849

2 0.4 12/07/97 12 7 2 6.864574 46.84849

3 0.4 12/07/97 12 7 2 6.864574 46.84849

4 0.4 12/07/97 12 7 2 6.864574 46.84849

5 0.4 12/07/97 12 7 2 6.864574 46.84849

6 3.6 12/07/97 12 7 18 6.864574 46.84849

geometry

1 POINT (6.864574 46.84849)

2 POINT (6.864574 46.84849)

3 POINT (6.864574 46.84849)

4 POINT (6.864574 46.84849)

5 POINT (6.864574 46.84849)

6 POINT (6.864574 46.84849)The code suggests to write the points as a .kml file to open in Google Earth.

# Write the points as kml, wich you can open in google earth

owls_kml_file <- here("data", "Owls_wgs84.kml")

if (!file.exists(owls_kml_file)) {

st_write(Owls_sf_wgs84,

owls_kml_file,

driver = "kml", delete_dsn = TRUE)

}The code relied ggmap, which makes an API request to Google maps. Of course, in 2024 we are required to use an API key for that. As an alternative I suggest leaflet which is more likely to work in the future and does not require to mess with API keys.

leaflet(Owls_sf_wgs84) %>%

addTiles() %>% # Add default OpenStreetMap map tiles

addMarkers()For a more publication-oriented map with ggmap I would recommend using the stadiamap API.

# I suppress the API message

glgmap <- suppressMessages(get_stadiamap(c(6.7, 46.70, 7.2, 46.96)))

p_ggmap <- ggmap(glgmap) +

geom_point(aes(X, Y),

data = Owls_sf_wgs84,

size = 4) +

xlab("Longitude") + ylab("Latitude") +

theme(text = element_text(size = 15))

p_ggmap

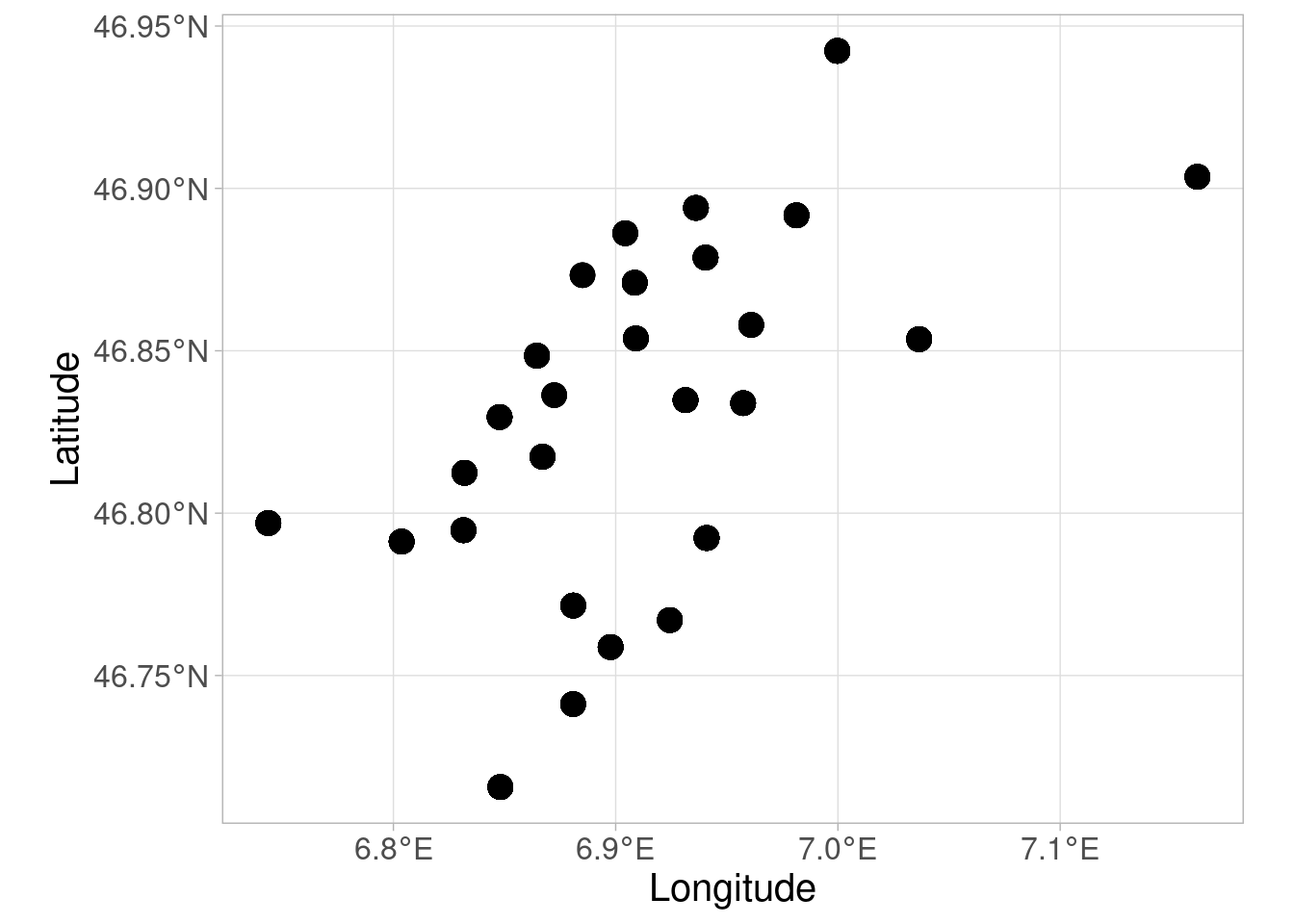

We can also do a simple sf + ggplot plot.

p_simple <- ggplot(Owls_sf_wgs84) +

geom_sf(size = 4) +

xlab("Longitude") + ylab("Latitude") +

theme_light() + theme(text = element_text(size = 15))

p_simple

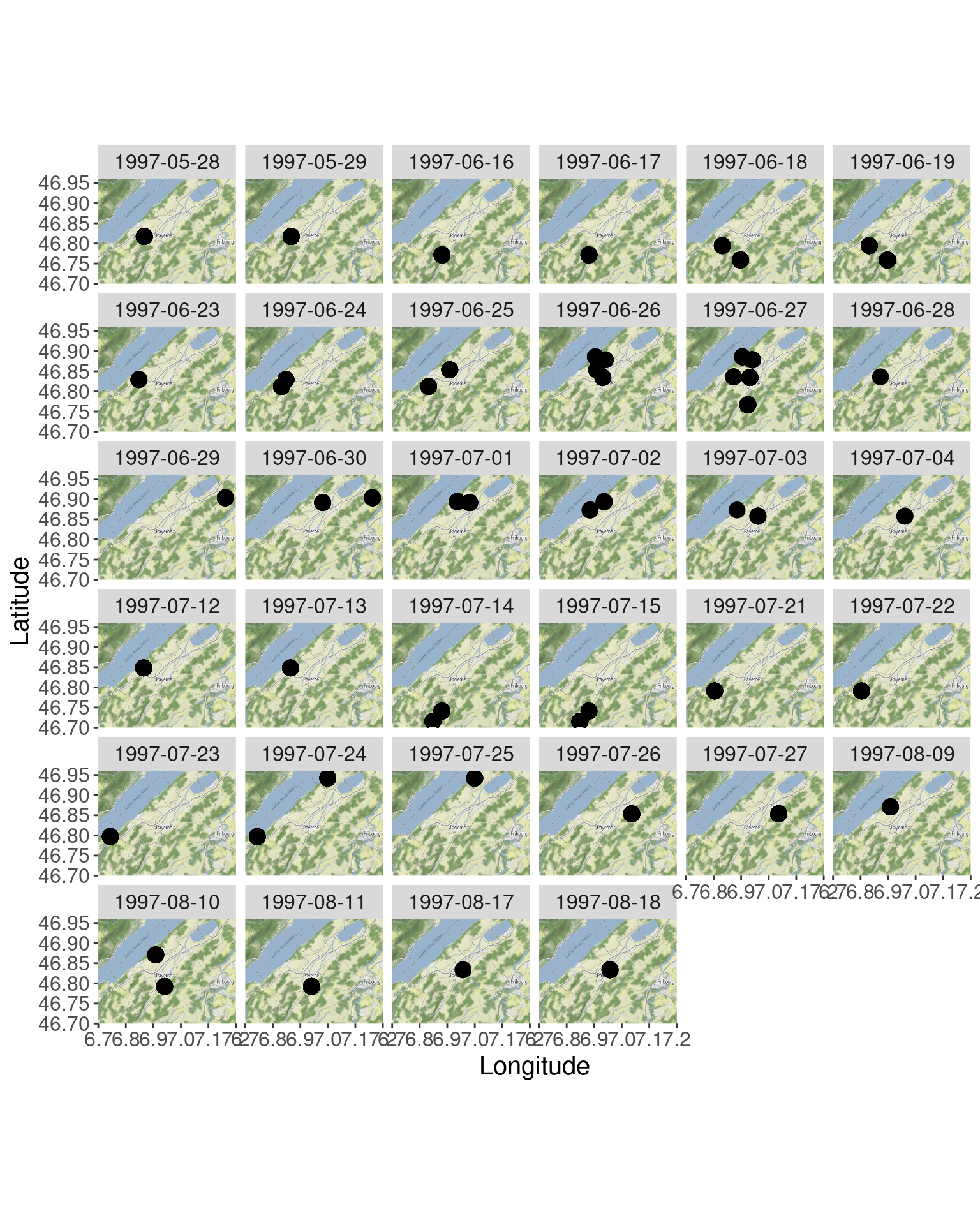

Finally, the code suggest another two plots that are not in the paper, but are useful. The first time is a plot of the time series.

p_series <- ggplot() +

xlab("Arrival time") + ylab("Number of calls") +

theme(text = element_text(size = 15)) + theme_light() +

geom_point(data = Owls_sf_wgs84,

aes(x = ArrivalTime,

y = NCalls,

color = FoodTreatment),

size = 2) +

geom_line(data = Owls_sf_wgs84,

aes(x = ArrivalTime,

y = NCalls,

group = FoodTreatment,

color = FoodTreatment)) +

facet_wrap( ~ Nest, ncol = 4)

p_series

The second shows how sampling unfolds across space and time, but I find the plot a little unwieldy as it facets multiple maps.

# We parse the date column as a proper date

Owls_sf_wgs84$Date_parsed <- as_date(Owls_sf_wgs84$Date, format = "%d/%m/%y")

p_ggmap_facet <- ggmap(glgmap) +

geom_point(aes(X, Y),

data = Owls_sf_wgs84,

size = 4) +

xlab("Longitude") + ylab("Latitude") +

theme(text = element_text(size = 15)) +

facet_wrap(~Date_parsed)

p_ggmap_facet

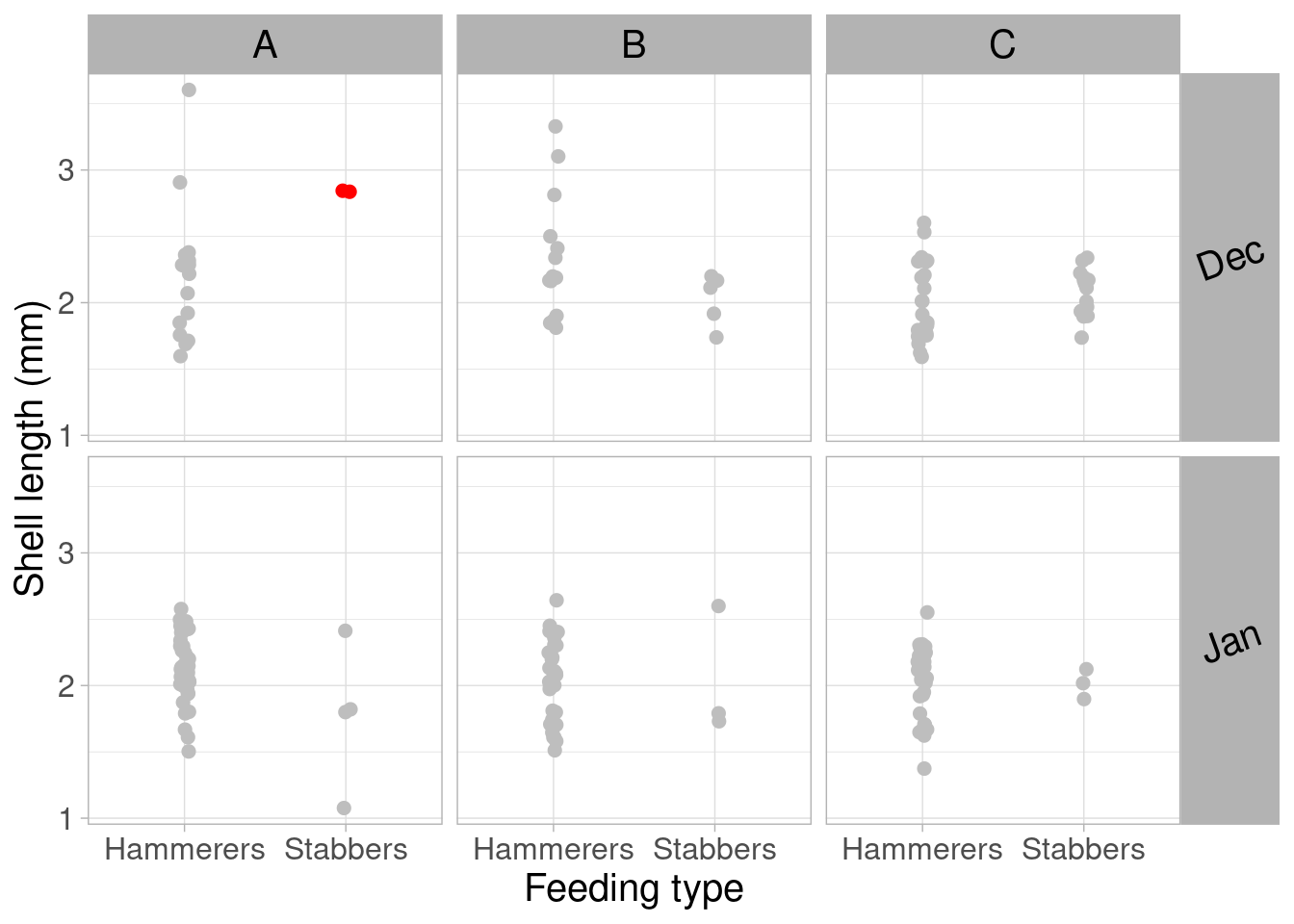

Step 3: Conduct data exploration

There is another even older Zurr et al. paper for the 10 steps of data exploration. The first figure of that paper gives you the gist of the protocol (see the other page on this website to reproduce the paper).

We now move from owls to oystercatchers. The study related the length of clams preyed upon and the feeding behavior of oystercatchers, accross time and space. The authors describe how the design suggests a 3-way interaction term that would in practicality only be driven by a couple of data points. The following plot shows that in location A in December, there were only two observations, both showing the same value. Note that I modified the plot to add colors to those problematic points to make the visualization clearer

# Set a color column

OC$color_pt <- ifelse(OC$Month == "Dec" &

OC$FeedingType == "Stabbers" &

OC$FeedingPlot == "A",

"red", "grey")

# Here is the code for Figure 3

p_OC <- ggplot() + xlab("Feeding type") + ylab("Shell length (mm)") +

geom_point(data = OC,

aes(x = FeedingType, y = ShellLength),

color = OC$color_pt,

position = position_jitter(width = .03),

size = 2) +

facet_grid(Month ~ FeedingPlot,

scales = "fixed") +

theme_light() +

theme(text = element_text(size = 15)) +

theme(legend.position = "none") +

theme(strip.text.y = element_text(size = 15,

colour = "black",

angle = 20),

strip.text.x = element_text(size = 15,

colour = "black",

angle = 0)

)

p_OC

You should spend a good amount of time on data exploration. It will also help you identify data quality issues and encoding errors.

Note that it is sometimes useful to tabulate data across levels of a given factor.

table(OC$Month, OC$FeedingPlot, OC$FeedingType), , = Hammerers

A B C

Dec 17 14 26

Jan 43 31 34

, , = Stabbers

A B C

Dec 2 5 15

Jan 4 3 3Step 4: Identify the dependency structure in the data

The authors warn that it is rare to find a dataset without dependency structures. They advise GLMM as a way to deal with pseudoreplicated data (relying on pooling as a way to properly model dependencies between, say, sampling locations etc..).

We now move on to the baboon dataset. I think it is the same dataset used in Statistical Rethinking for teaching multilevel modelling. The design: the researchers are interested in understanding grooming behavior between baboons. Baboon may hold different ranks within the troop (represented as a value between 0 and 1). Some 60 baboons are sampled multiple times, for an hour at a time, its grooming behavior recorded, making the receiver of the behavior another layer of dependency. This pseudoreplication structure requires a mixed-effects model with random effects (two-way nested AND crossed). The structure looks like this:

The authors suggest to properly report the structure. Personally I love seeing, in papers, when both a graphical, a textual AND a mathematical description is given.

The textual description for baboon and owl data:

This data set consists of multiple observations of rank differences of a given baboon and receivers within a focal hour, along with multiple observations of a given receiver. We therefore applied a mixed-effects model with the random effect focal hour nested within the random effect baboon and a crossed random effect receiver

We sampled each nest multiple times and therefore applied a GLMM in which nest is used as random intercept, as this models a dependency structure among sibling negotiation observations of the same nest

Step 5: Present the statistical model

Speaking of mathematical description, this step is where the authors suggest this is given and stated clearly, under the form of an equation. Here is what it would look like for the owl data:

One of the key element in this is how the error structure of the data is stated clearly: here the count of calls is modelled as a Poisson distribution. It shows the log link function and how the model is clearly defined. The authors give further advice for how to explain choices of distributions.

For the baboon data:

If only all papers when to that length in describing their models (although I am definitely guilty of omitting details as well). The amazing package equatiomatic makes this easier.

# Although we will look at the model formulation at the next step in greater

# details, let's try and use it here with equationmatic

M1 <- glmer(NCalls ~ FoodTreatment + ArrivalTime + SexParent +

FoodTreatment : SexParent +

FoodTreatment : ArrivalTime +

(1 | Nest),

family = poisson,

data = Owls)

extract_eq(M1, wrap = TRUE, terms_per_line = 1)\[ \begin{aligned} \operatorname{NCalls}_{i} &\sim \operatorname{Poisson}(\lambda_i) \\ \log(\lambda_i) &=\alpha_{j[i]}\ + \\ &\quad \beta_{1}(\operatorname{FoodTreatment}_{\operatorname{Satiated}})\ + \\ &\quad \beta_{2}(\operatorname{ArrivalTime})\ + \\ &\quad \beta_{3}(\operatorname{SexParent}_{\operatorname{Male}})\ + \\ &\quad \beta_{4}(\operatorname{FoodTreatment}_{\operatorname{Satiated}} \times \operatorname{SexParent}_{\operatorname{Male}})\ + \\ &\quad \beta_{5}(\operatorname{ArrivalTime} \times \operatorname{FoodTreatment}_{\operatorname{Satiated}}) \\ \alpha_{j} &\sim N \left(\mu_{\alpha_{j}}, \sigma^2_{\alpha_{j}} \right) \text{, for Nest j = 1,} \dots \text{,J} \end{aligned} \]

Step 6: Fit the model

The authors first remind that it is important to say what software was used to fit the model, directly in the text. They add details as to how to report a MCMC analysis. This section of the paper is not very verbose and could have been expanded to talk about how versions of packages can be very relevant to the fitted results. Also, the paper could have discussed how fitted models are stored and conserved (with rds files) and implications of all this kind of stuff for the ability of future readers to make sense of the model.

One note concerning the owl dataset: in the code, the authors mention that the response variable should probably be transformed (but for some reason they do not show the process). They also mention that brood size should probably be used an offset in the model - but did not want to confuse readers of the paper with it, at the risk of confusing readers of the code by not explaining why and how the offset is needed here.

Let’s see the model again (note the additional code for the offset, if you want, but we won’t use it for the sake of consistency with the paper).

#For the offset we need:

Owls$LogBroodSize <- log(Owls$BroodSize)

library(lme4)

M1 <- glmer(NCalls ~ FoodTreatment + ArrivalTime + SexParent +

FoodTreatment : SexParent +

FoodTreatment : ArrivalTime +

#offset(LogBroodSize) + #Feel free to include

(1 | Nest),

family = poisson,

data = Owls)

# Numerical results:

summary(M1)Generalized linear mixed model fit by maximum likelihood (Laplace

Approximation) [glmerMod]

Family: poisson ( log )

Formula:

NCalls ~ FoodTreatment + ArrivalTime + SexParent + FoodTreatment:SexParent +

FoodTreatment:ArrivalTime + (1 | Nest)

Data: Owls

AIC BIC logLik deviance df.resid

5011.4 5042.1 -2498.7 4997.4 592

Scaled residuals:

Min 1Q Median 3Q Max

-3.4559 -1.7563 -0.6413 1.1770 11.1040

Random effects:

Groups Name Variance Std.Dev.

Nest (Intercept) 0.235 0.4847

Number of obs: 599, groups: Nest, 27

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 5.1698547 0.2926462 17.666 <2e-16 ***

FoodTreatmentSatiated -0.6540263 0.4686680 -1.396 0.1629

ArrivalTime -0.1296994 0.0113051 -11.473 <2e-16 ***

SexParentMale -0.0094526 0.0453669 -0.208 0.8349

FoodTreatmentSatiated:SexParentMale 0.1297493 0.0704391 1.842 0.0655 .

FoodTreatmentSatiated:ArrivalTime -0.0004913 0.0192171 -0.026 0.9796

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) FdTrtS ArrvlT SxPrnM FTS:SP

FdTrtmntStt -0.545

ArrivalTime -0.938 0.570

SexParentMl -0.060 0.055 -0.038

FdTrtmS:SPM 0.033 -0.049 0.024 -0.606

FdTrtmnS:AT 0.539 -0.993 -0.573 0.004 -0.043For the Bayesian baboon models, the code to run the model is given later in the script, but it is more appropriately shown here. You can skip this part if you are not familiar with Bayesian methods as this might be more confusing than anything. The authors re-use an example from the course they teach and only provide the minimal code needed to run the model which makes it difficult to get the full picture. That part of the provided code lacks the model validation section, and provide functions (which I have placed in a separate file for simplicity) about which the authors say the reader should not “bother trying to understand”…). Still, let’s source those functions.

source(here("scripts", "functions.R"))One of the relevant question to the analysis, which is provided as a code comment (instead of being mentioned in step 1, as I think it should have been) is that Subordinates should prefer to groom more dominant animals earlier in the day. To look at this we need to subset the data.

Monkeys_sub <- Monkeys[Monkeys$SubordinateGrooms == "yes",]Then we prepare the model data to run with JAGS.

# Transfor the response variable:

N <- nrow(Monkeys_sub)

Monkeys_sub$RD.scaled <- (Monkeys_sub$RankDifference * (N - 1) + 0.5) / N

# A full explanation of zero inflatedbeta models is provided in

# Zuur's Beginner's Guide to Zero-Inflated Models with R'

# For MCMC it is essential to standardize continuous covariates

Mystd <- function(x) {(x - mean(x)) / sd(x)}

Monkeys_sub$Time.std <- Mystd(Monkeys_sub$Time)

Monkeys_sub$Relatedness.std <- Mystd(Monkeys_sub$Relatedness)

# JAGS coding

# Create X matrix

X <- model.matrix(~Time.std * Relatedness.std + Relatedness.std * GroupSize,

data = Monkeys_sub)

K <- ncol(X)

# Random effects:

reFocalGroomer <- as.numeric(as.factor(Monkeys_sub$FocalGroomer))

NumFocalGroomer <- length(unique(Monkeys_sub$FocalGroomer))

reFocalHour <- as.numeric(as.factor(Monkeys_sub$FocalHour))

NumFocalHour <- length(unique(Monkeys_sub$FocalHour))

reReceiver <- as.numeric(as.factor(Monkeys_sub$Receiver))

NumReceiver <- length(unique(Monkeys_sub$Receiver))

#Put all data for JAGS in a list

JAGS.data <- list(Y = Monkeys_sub$RD.scaled,

N = nrow(Monkeys_sub),

X = X,

K = ncol(X),

reFGroomer = reFocalGroomer,

NumFGroomer = NumFocalGroomer,

reFHour = reFocalHour,

NumFHour = NumFocalHour,

reRec = reReceiver,

NumReceiver = NumReceiver

)

str(JAGS.data)List of 10

$ Y : num [1:1178] 0.697 0.632 0.169 0.378 0.511 ...

$ N : int 1178

$ X : num [1:1178, 1:6] 1 1 1 1 1 1 1 1 1 1 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:1178] "1" "2" "3" "4" ...

.. ..$ : chr [1:6] "(Intercept)" "Time.std" "Relatedness.std" "GroupSizesmall" ...

..- attr(*, "assign")= int [1:6] 0 1 2 3 4 5

..- attr(*, "contrasts")=List of 1

.. ..$ GroupSize: chr "contr.treatment"

$ K : int 6

$ reFGroomer : num [1:1178] 2 58 58 47 13 46 46 46 46 30 ...

$ NumFGroomer: int 59

$ reFHour : num [1:1178] 1 2 2 3 4 5 5 5 5 6 ...

$ NumFHour : int 573

$ reRec : num [1:1178] 5 59 47 41 57 14 14 56 49 57 ...

$ NumReceiver: int 59We then run the chains. I do not run the updates as they take too much time on my laptop, hence why the results might not be matching perfectly the reported numbers in the paper.

# JAGS code

jags_file_path <- here("jags", "BetaRegmm.txt")

if (!file.exists(jags_file_path)) {

sink(jags_file_path)

cat("

model{

#Priors regression parameters, theta

for (i in 1:K) { beta[i] ~ dnorm(0, 0.0001) }

theta ~ dunif(0, 20)

#Priors random effects

for (i in 1: NumFGroomer) {a[i] ~ dnorm(0, tau.gr) }

for (i in 1: NumFHour) {b[i] ~ dnorm(0, tau.fh) }

for (i in 1: NumReceiver) {c[i] ~ dnorm(0, tau.rc) }

#Priors for the variances of the random effects

tau.gr <- 1 / (sigma.gr * sigma.gr)

tau.fh <- 1 / (sigma.fh * sigma.fh)

tau.rc <- 1 / (sigma.rc * sigma.rc)

sigma.gr ~ dunif(0, 10)

sigma.fh ~ dunif(0, 10)

sigma.rc ~ dunif(0, 10)

#######################

#Likelihood

for (i in 1:N){

Y[i] ~ dbeta(shape1[i], shape2[i])

shape1[i] <- theta * pi[i]

shape2[i] <- theta * (1 - pi[i])

logit(pi[i]) <- eta[i] + a[reFGroomer[i]] + b[reFHour[i]] + c[reRec[i]]

eta[i] <- inprod(beta[], X[i,])

}

}

", fill = TRUE)

sink()

}

# Set the initial values for the betas and sigma

inits <- function() {

list(

beta = rnorm(ncol(X), 0, 0.1),

theta = runif(0, 20),

sigma.gr = runif(0, 10),

sigma.fh = runif(0, 10),

sigma.rc = runif(0, 10)

) }

#Parameters to estimate

params <- c("beta", "theta",

"sigma.gr", "sigma.fh", "sigma.rc")

model_path <- here("jags", "J0.rds")

if (!file.exists(model_path)) {

J0 <- jags(data = JAGS.data,

inits = inits,

parameters = params,

model.file = jags_file_path,

n.thin = 10,

n.chains = 3,

n.burnin = 4000,

n.iter = 5000)

saveRDS(J0, model_path)

} else {

J0 <- readRDS(model_path)

}

# Fast computer:

# J1 <- update(J0, n.iter = 10000, n.thin = 10)

# J2 <- update(J1, n.iter = 50000, n.thin = 10)

# out <- J2$BUGSoutput

out <- J0$BUGSoutput

str(out)List of 24

$ n.chains : int 3

$ n.iter : num 5000

$ n.burnin : num 4000

$ n.thin : num 10

$ n.keep : int 100

$ n.sims : int 300

$ sims.array : num [1:100, 1:3, 1:11] 0.298 0.327 0.282 0.217 0.246 ...

..- attr(*, "dimnames")=List of 3

.. ..$ : NULL

.. ..$ : NULL

.. ..$ : chr [1:11] "beta[1]" "beta[2]" "beta[3]" "beta[4]" ...

$ sims.list :List of 6

..$ beta : num [1:300, 1:6] 0.2293 0.2488 0.3311 0.0713 0.1399 ...

..$ deviance: num [1:300, 1] -1461 -1610 -1597 -1588 -1573 ...

..$ sigma.fh: num [1:300, 1] 0.197 0.325 0.34 0.264 0.281 ...

..$ sigma.gr: num [1:300, 1] 0.491 0.594 0.496 0.461 0.594 ...

..$ sigma.rc: num [1:300, 1] 0.585 0.603 0.553 0.545 0.484 ...

..$ theta : num [1:300, 1] 11.8 12.4 12.9 12.7 13.3 ...

$ sims.matrix : num [1:300, 1:11] 0.2293 0.2488 0.3311 0.0713 0.1399 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : NULL

.. ..$ : chr [1:11] "beta[1]" "beta[2]" "beta[3]" "beta[4]" ...

$ summary : num [1:11, 1:9] 0.33 -0.0503 -0.0394 -0.2878 0.0413 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:11] "beta[1]" "beta[2]" "beta[3]" "beta[4]" ...

.. ..$ : chr [1:9] "mean" "sd" "2.5%" "25%" ...

$ mean :List of 6

..$ beta : num [1:6(1d)] 0.33 -0.0503 -0.0394 -0.2878 0.0413 ...

..$ deviance: num [1(1d)] -1623

..$ sigma.fh: num [1(1d)] 0.326

..$ sigma.gr: num [1(1d)] 0.551

..$ sigma.rc: num [1(1d)] 0.542

..$ theta : num [1(1d)] 13.3

$ sd :List of 6

..$ beta : num [1:6(1d)] 0.1565 0.0224 0.0425 0.2546 0.0216 ...

..$ deviance: num [1(1d)] 59.3

..$ sigma.fh: num [1(1d)] 0.0422

..$ sigma.gr: num [1(1d)] 0.0714

..$ sigma.rc: num [1(1d)] 0.0618

..$ theta : num [1(1d)] 0.877

$ median :List of 6

..$ beta : num [1:6(1d)] 0.3129 -0.0499 -0.0399 -0.2867 0.0391 ...

..$ deviance: num [1(1d)] -1625

..$ sigma.fh: num [1(1d)] 0.332

..$ sigma.gr: num [1(1d)] 0.546

..$ sigma.rc: num [1(1d)] 0.539

..$ theta : num [1(1d)] 13.2

$ root.short : chr [1:6] "beta" "deviance" "sigma.fh" "sigma.gr" ...

$ long.short :List of 6

..$ : int [1:6] 1 2 3 4 5 6

..$ : int 7

..$ : int 8

..$ : int 9

..$ : int 10

..$ : int 11

$ dimension.short: num [1:6] 1 0 0 0 0 0

$ indexes.short :List of 6

..$ :List of 6

.. ..$ : num 1

.. ..$ : num 2

.. ..$ : num 3

.. ..$ : num 4

.. ..$ : num 5

.. ..$ : num 6

..$ : NULL

..$ : NULL

..$ : NULL

..$ : NULL

..$ : NULL

$ last.values :List of 3

..$ :List of 5

.. ..$ beta : num [1:6(1d)] 0.3831 -0.0217 0.0398 -0.3688 0.0303 ...

.. ..$ deviance: num [1(1d)] -1560

.. ..$ sigma.fh: num [1(1d)] 0.288

.. ..$ sigma.gr: num [1(1d)] 0.546

.. ..$ sigma.rc: num [1(1d)] 0.517

..$ :List of 5

.. ..$ beta : num [1:6(1d)] 0.04858 -0.06041 -0.00364 -0.02162 0.02057 ...

.. ..$ deviance: num [1(1d)] -1507

.. ..$ sigma.fh: num [1(1d)] 0.26

.. ..$ sigma.gr: num [1(1d)] 0.541

.. ..$ sigma.rc: num [1(1d)] 0.406

..$ :List of 5

.. ..$ beta : num [1:6(1d)] 0.1042 -0.041 -0.101 -0.2195 0.0534 ...

.. ..$ deviance: num [1(1d)] -1573

.. ..$ sigma.fh: num [1(1d)] 0.303

.. ..$ sigma.gr: num [1(1d)] 0.537

.. ..$ sigma.rc: num [1(1d)] 0.571

$ program : chr "jags"

$ model.file : chr "/home/vlucet/Documents/WILDLab/repos/ZuurIeno10steps/jags/BetaRegmm.txt"

$ isDIC : logi TRUE

$ DICbyR : logi TRUE

$ pD : num 1695

$ DIC : num 71.9

- attr(*, "class")= chr "bugs"We process the results of the Bayesian model.

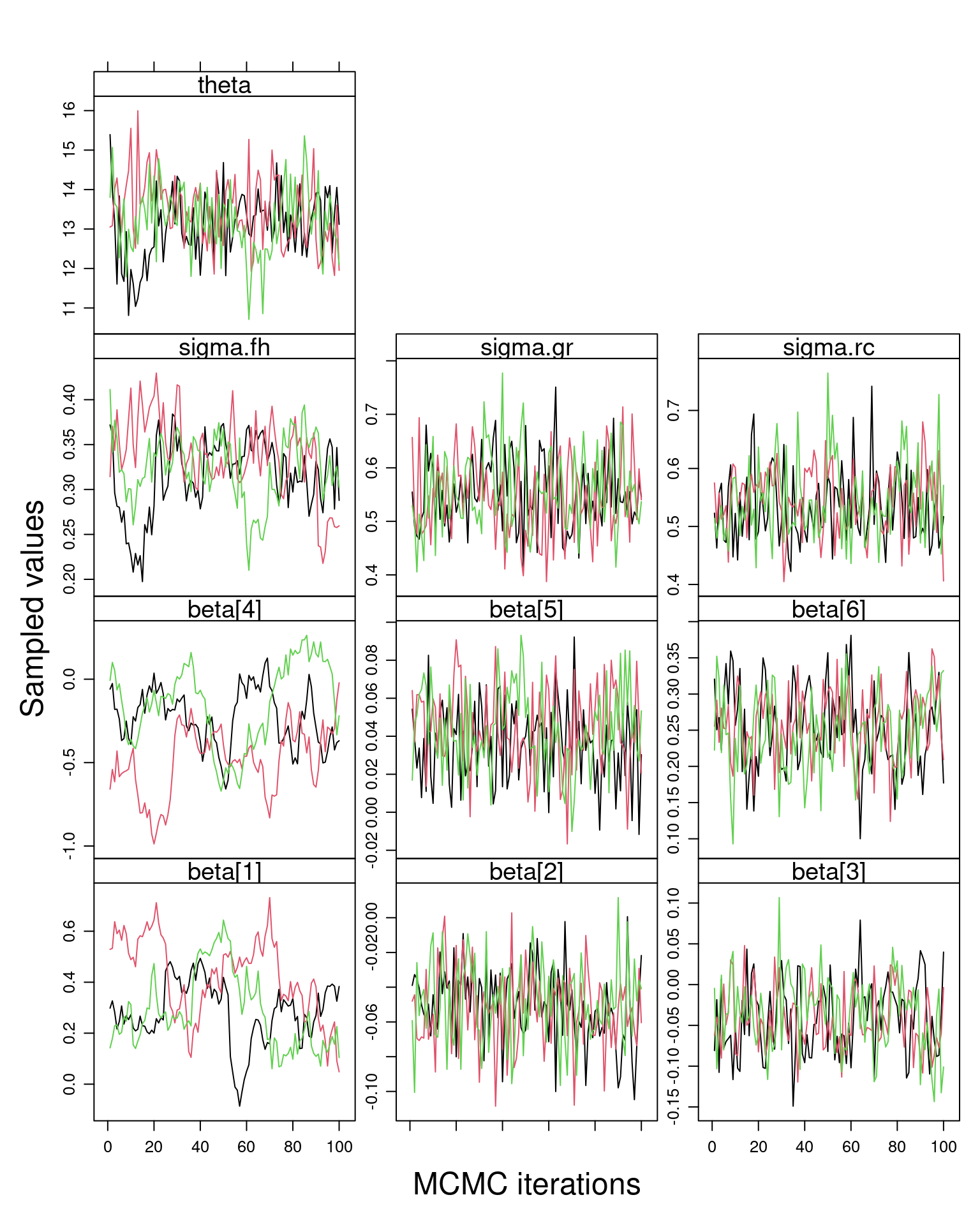

# Assess chain mixing (an indication of how well the model ran)

MyBUGSChains(out,

c(uNames("beta", K), "theta", "sigma.gr", "sigma.fh", "sigma.rc"))

Step 7: Validate the model

What is validation? “Model validation confirms that the model complies with underlying assumptions.”

Regression models have assumptions (in particular about the structure of residuals) and the fore violation of those assumptions may lead to increase bias, type 1 error rate, etc. Residuals should be plotted against all variables (and time and space dimensions if applicable) and autocorellation should be modeled if applicable.

To get to the residuals and fitted values:

E1 <- resid(M1, type = "pearson")

F1 <- fitted(M1)

str(E1) Named num [1:599] -1.19 -1.87 -1.87 -1.85 -1.85 ...

- attr(*, "names")= chr [1:599] "1" "2" "3" "4" ...str(F1) Named num [1:599] 7.18 6.93 6.9 6.85 6.82 ...

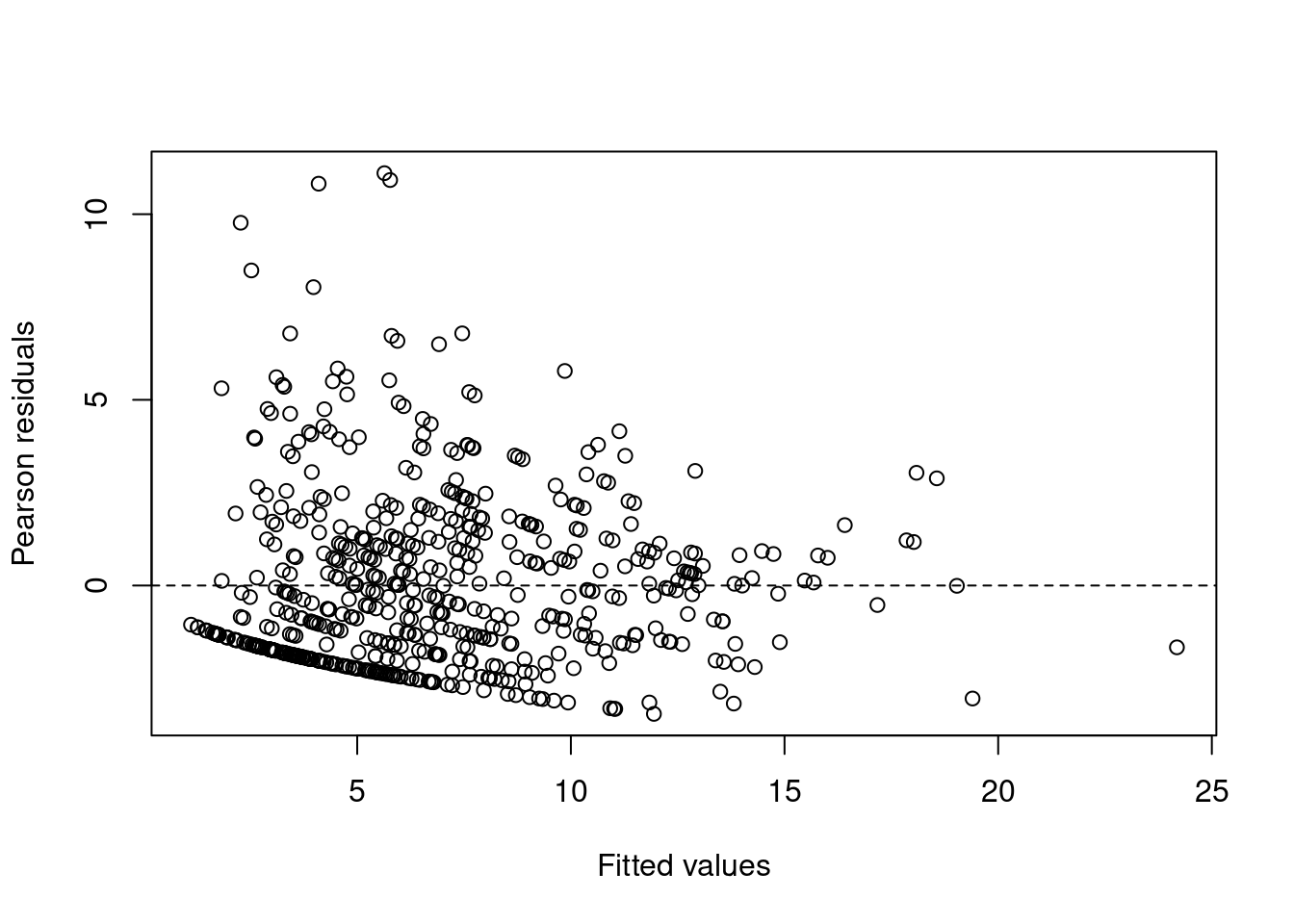

- attr(*, "names")= chr [1:599] "1" "2" "3" "4" ...In the context of the owl data, the authors describe finding a pattern of over dispersion by calculating an over dispersion metric:

# Get the dispersion statistic

# (not counting random effects as parameters)

E1 <- resid(M1, type = "pearson")

N <- nrow(Owls)

p <- length(fixef(M1)) + 1

sum(E1^2) / (N - p)[1] 5.439501Not in the paper, they also flag a potential issue with heterogeneity…

plot(x = F1,

y = E1,

xlab = "Fitted values",

ylab = "Pearson residuals")

abline(h = 0, lty = 2)

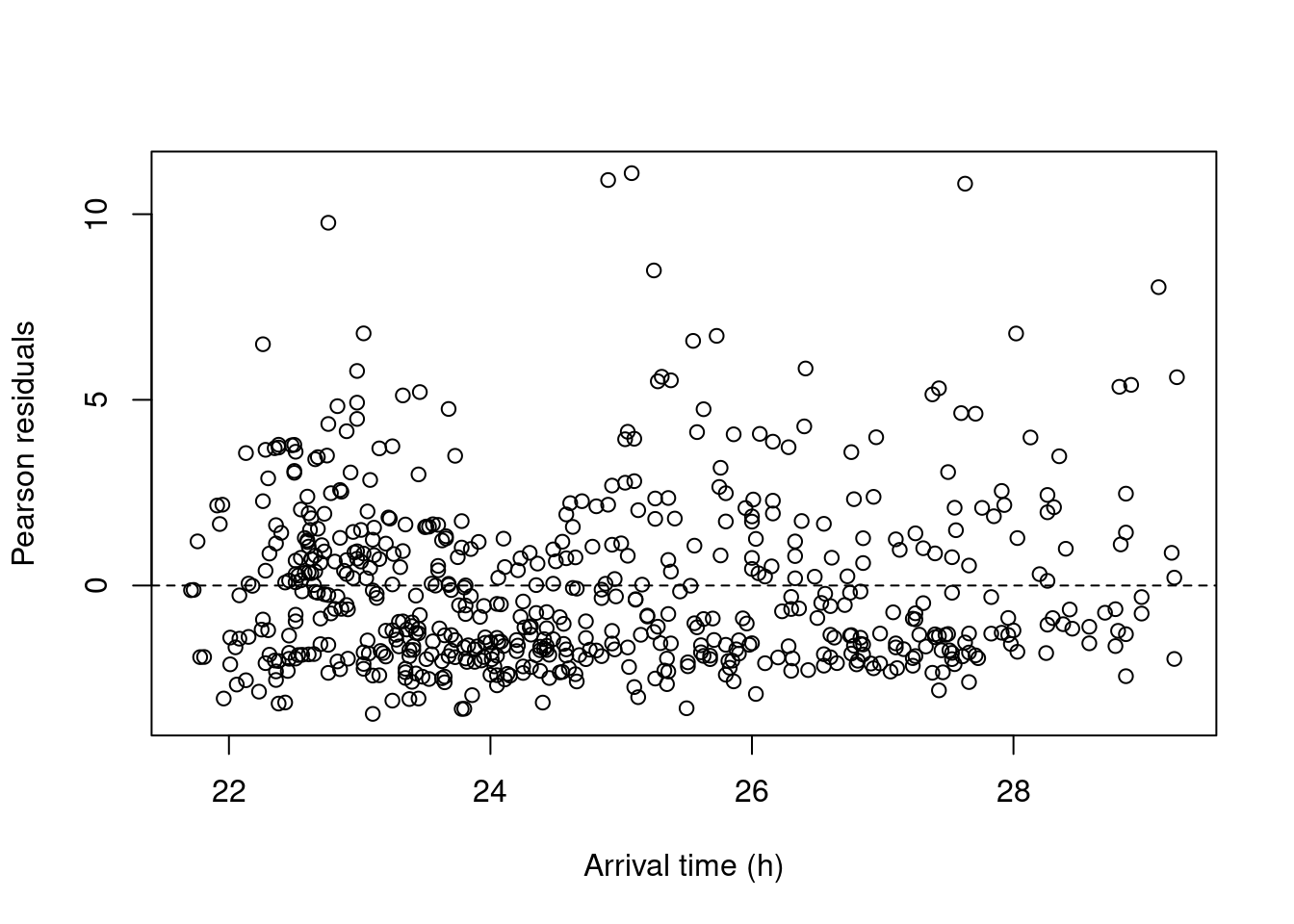

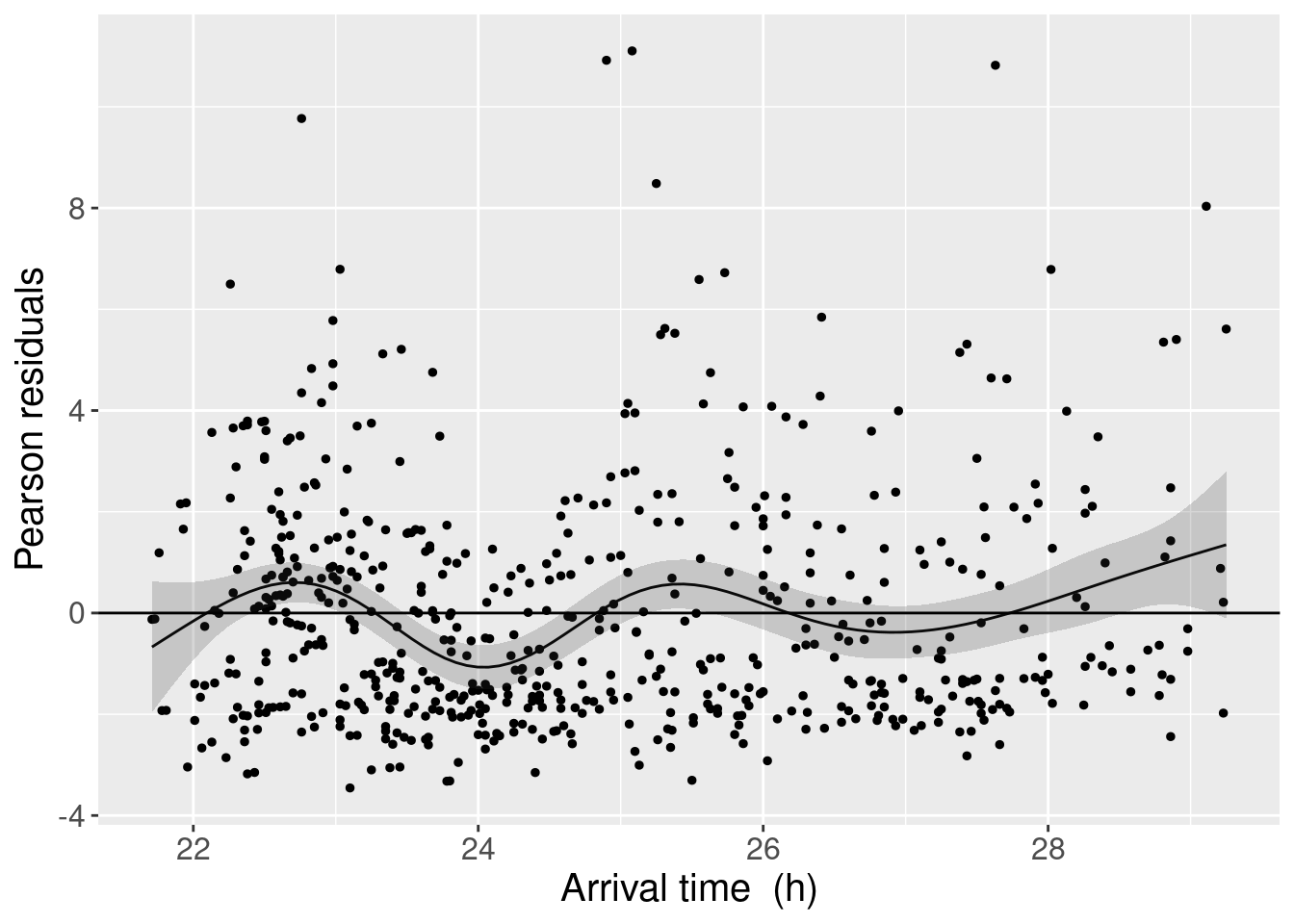

Building up to the next figure in the paper, we plot arrival time against those residuals.

plot(x = Owls$ArrivalTime,

y = E1,

xlab = "Arrival time (h)",

ylab = "Pearson residuals")

abline(h = 0, lty = 2)

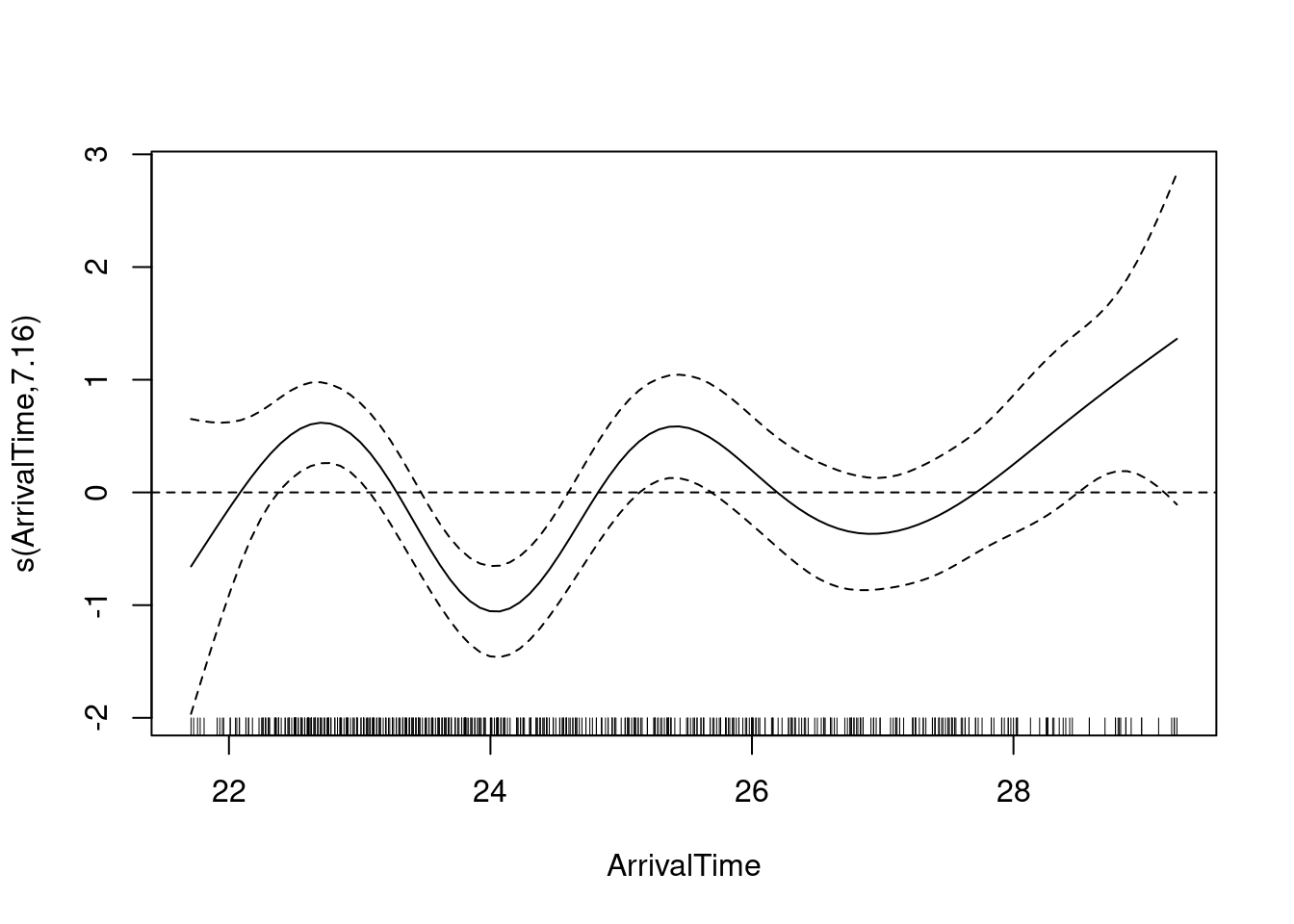

Suspecting a non-linear structure, the authors decide to fit a GAM on the residuals. As they write in the code “If the smoother is not significant, or if it explains a small amount of variation, then there are indeed no residual patterns.”

T2 <- gam(E1 ~ s(ArrivalTime), data = Owls)

summary(T2)

Family: gaussian

Link function: identity

Formula:

E1 ~ s(ArrivalTime)

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.01448 0.09185 -0.158 0.875

Approximate significance of smooth terms:

edf Ref.df F p-value

s(ArrivalTime) 7.159 8.206 5.011 4.88e-06 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.0615 Deviance explained = 7.27%

GCV = 5.1235 Scale est. = 5.0537 n = 599plot(T2)

abline(h = 0, lty = 2)

Now, we can reproduce the next figure in the paper. The authors used a plotting technique for the predictions which consists in creating a grid of arrival times to feed to the predict function. To this we add the smoother and its confidence interval, all of it using ggplot2.

time_range <- range(Owls$ArrivalTime)

MyData <- data.frame(ArrivalTime = seq(time_range[1], time_range[2],

length = 100))

P <- predict(T2, newdata = MyData, se = TRUE)

MyData$mu <- P$fit

MyData$SeUp <- P$fit + 1.96 * P$se

MyData$SeLo <- P$fit - 1.96 * P$se

Owls$E1 <- E1

p_M1 <- ggplot() +

xlab("Arrival time (h)") + ylab("Pearson residuals") +

theme(text = element_text(size = 15)) +

geom_point(data = Owls,

aes(x = ArrivalTime, y = E1),

size = 1) +

geom_line(data = MyData,

aes(x = ArrivalTime, y = mu),

colour = "black") +

geom_ribbon(data = MyData,

aes(x = ArrivalTime,

ymax = SeUp,

ymin = SeLo ),

alpha = 0.2) +

geom_hline(yintercept = 0)

p_M1

From this exploration of the residuals, the authors conclude that we should be using a GAMM instead…

A couple of notes: the authors mention in a figure caption that the smooth only explains 7% of the variation in the residuals, which as I understand it (maybe badly), is quite low. They also mention other factors that could be responsible for overdispersion: “a missing covariate, the relatively large number of zero observations (25%) or dependency within (or between) nests”. The pattern with arrival time seems quite clear, and I am left convinced it should be dealt with in the model. I am not left convinced that a complex GAMM would be the first answer, however.

Validation for the baboon bayesian model is not present in the code.

Step 8: Interpret and present the numerical output of the model

Interpreting complex regression results in R can be complicated, especially when it comes to interaction terms and how they change intercepts and slopes. The authors suggest to report the main results in a table: “estimated parameters, standard errors, t-values, R2 and the estimated variance”. For mixed effects models: report multiple variances, calculate intraclass correlation to derive the R2. For GAMs/GAMMs, make sure to report the effective df of smoothers.

The authors report the controversies around reporting p-values. They say: “Their interpretation is prone to abuse, and, for most of the frequentist techniques mentioned, P-values are approximate at best. They must be interpreted with care, and this should be emphasized in the paper. An alternative is to present 95% confidence intervals for the regression parameters and effect size estimates and their precision”.

Concerning the owl model, writing out the actual effects might help. Here I insert the next equation in the paper:

The authors simply report the Fixed effects table from summary(M1).

M1_sum <- summary(M1)

M1_table <- M1_sum$coefficients |> as.data.frame()

print(M1_table) Estimate Std. Error z value

(Intercept) 5.1698547275 0.29264624 17.66588463

FoodTreatmentSatiated -0.6540262583 0.46866801 -1.39550010

ArrivalTime -0.1296994152 0.01130508 -11.47266482

SexParentMale -0.0094525874 0.04536692 -0.20835861

FoodTreatmentSatiated:SexParentMale 0.1297492849 0.07043915 1.84200531

FoodTreatmentSatiated:ArrivalTime -0.0004913068 0.01921705 -0.02556619

Pr(>|z|)

(Intercept) 7.679934e-70

FoodTreatmentSatiated 1.628651e-01

ArrivalTime 1.809982e-30

SexParentMale 8.349490e-01

FoodTreatmentSatiated:SexParentMale 6.547437e-02

FoodTreatmentSatiated:ArrivalTime 9.796034e-01In reports, these table came be outputted to a markdown table and nicely formatted.

kable(M1_table)| Estimate | Std. Error | z value | Pr(>|z|) | |

|---|---|---|---|---|

| (Intercept) | 5.1698547 | 0.2926462 | 17.6658846 | 0.0000000 |

| FoodTreatmentSatiated | -0.6540263 | 0.4686680 | -1.3955001 | 0.1628651 |

| ArrivalTime | -0.1296994 | 0.0113051 | -11.4726648 | 0.0000000 |

| SexParentMale | -0.0094526 | 0.0453669 | -0.2083586 | 0.8349490 |

| FoodTreatmentSatiated:SexParentMale | 0.1297493 | 0.0704391 | 1.8420053 | 0.0654744 |

| FoodTreatmentSatiated:ArrivalTime | -0.0004913 | 0.0192171 | -0.0255662 | 0.9796034 |

Alternatively, one can use a package designed to deal with tables specifically, such as gtable (for structured tables with tidy data), or more appropriately here, stargazer (to compare models) or sjPlot.

# Here we use the html for this report, and note the results='asis' code chunck

# options, but stargazer has other output format options

stargazer(M1, type = "html", flip = TRUE)| Dependent variable: | |

| NCalls | |

| FoodTreatmentSatiated | -0.654 |

| (0.469) | |

| ArrivalTime | -0.130*** |

| (0.011) | |

| SexParentMale | -0.009 |

| (0.045) | |

| FoodTreatmentSatiated:SexParentMale | 0.130* |

| (0.070) | |

| FoodTreatmentSatiated:ArrivalTime | -0.0005 |

| (0.019) | |

| Constant | 5.170*** |

| (0.293) | |

| Observations | 599 |

| Log Likelihood | -2,498.690 |

| Akaike Inf. Crit. | 5,011.381 |

| Bayesian Inf. Crit. | 5,042.147 |

| Note: | p<0.1; p<0.05; p<0.01 |

tab_model(M1)| NCalls | |||

|---|---|---|---|

| Predictors | Incidence Rate Ratios | CI | p |

| (Intercept) | 175.89 | 99.11 – 312.13 | <0.001 |

| FoodTreatment [Satiated] | 0.52 | 0.21 – 1.30 | 0.163 |

| ArrivalTime | 0.88 | 0.86 – 0.90 | <0.001 |

| SexParent [Male] | 0.99 | 0.91 – 1.08 | 0.835 |

| FoodTreatment [Satiated] × SexParent [Male] |

1.14 | 0.99 – 1.31 | 0.065 |

| FoodTreatment [Satiated] × ArrivalTime |

1.00 | 0.96 – 1.04 | 0.980 |

| Random Effects | |||

| σ2 | 0.16 | ||

| τ00 Nest | 0.23 | ||

| ICC | 0.59 | ||

| N Nest | 27 | ||

| Observations | 599 | ||

| Marginal R2 / Conditional R2 | 0.267 / 0.699 | ||

The values in the last equation are produced by expanding the correct values from the table into the formula. Note that here, “the overdispersion and nonlinear pattern in the Pearson residuals invalidate the results”.

For MCMC models, the same is applicable. Here is what the equation would look like for a model like the baboon data example (for large groups, as for small groups there need to be a correction, see the paper for the resulting equation):

The table for this model is treated similarly with kable.

# present output

OUT1 <- MyBUGSOutput(out,

c(uNames("beta", K), "sigma.gr", "sigma.fh", "sigma.rc", "theta"))

rownames(OUT1)[1:K] <- colnames(X)

print(OUT1, digits = 5) mean se 2.5% 97.5%

(Intercept) 0.330026 0.156454 0.05525310 0.6221030

Time.std -0.050283 0.022368 -0.09657115 -0.0080435

Relatedness.std -0.039357 0.042529 -0.11658754 0.0402774

GroupSizesmall -0.287839 0.254589 -0.81645269 0.1611966

Time.std:Relatedness.std 0.041279 0.021623 0.00014808 0.0831566

Relatedness.std:GroupSizesmall 0.252929 0.053244 0.14178199 0.3517761

sigma.gr 0.551376 0.071424 0.42725554 0.6907920

sigma.fh 0.326122 0.042196 0.22980833 0.4011921

sigma.rc 0.541729 0.061770 0.43822784 0.6811514

theta 13.253933 0.876666 11.65854514 15.0072451kable(OUT1)| mean | se | 2.5% | 97.5% | |

|---|---|---|---|---|

| (Intercept) | 0.3300258 | 0.1564539 | 0.0552531 | 0.6221030 |

| Time.std | -0.0502832 | 0.0223680 | -0.0965711 | -0.0080435 |

| Relatedness.std | -0.0393575 | 0.0425290 | -0.1165875 | 0.0402774 |

| GroupSizesmall | -0.2878393 | 0.2545893 | -0.8164527 | 0.1611966 |

| Time.std:Relatedness.std | 0.0412791 | 0.0216227 | 0.0001481 | 0.0831566 |

| Relatedness.std:GroupSizesmall | 0.2529292 | 0.0532444 | 0.1417820 | 0.3517761 |

| sigma.gr | 0.5513762 | 0.0714242 | 0.4272555 | 0.6907920 |

| sigma.fh | 0.3261219 | 0.0421959 | 0.2298083 | 0.4011921 |

| sigma.rc | 0.5417293 | 0.0617704 | 0.4382278 | 0.6811514 |

| theta | 13.2539334 | 0.8766656 | 11.6585451 | 15.0072451 |

I am not aware of any packages for pretty printing of JAGS model output tables.

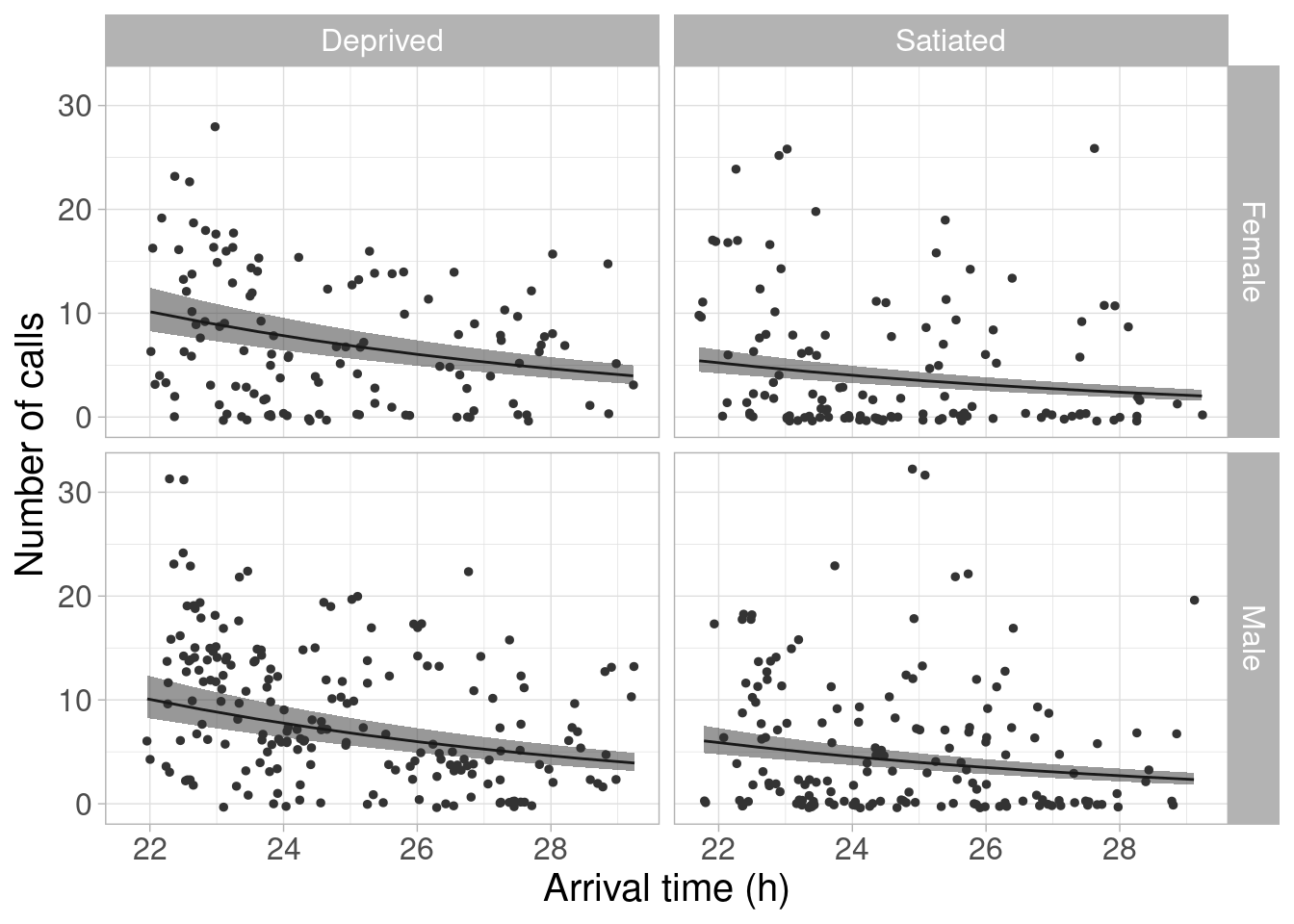

Step 9: Create a visual representation of the model

This is usually the main “results” figure: a visual plotting of the model in action. One should strive to avoid plotting redundant information while still showing the fitted values and the model variance.

Again, the authors remind owl GLMM is “overdispersed, and the residuals contained nonlinear patterns”, which could be improved with a GAMM with nonlinear time arrival effect (then, why not show that, instead of reminding us many times in the paper and the code how bad the analysis actually is). But let’s imagine the GLMM was sufficient and reproduce the next figure in the pap?er. The comments in the original code are quite useful here. I just modified the code to remove a warning.

# A. Specify covariate values for predictions

MyData <- ddply(Owls,

.(SexParent, FoodTreatment),

summarize,

ArrivalTime = seq(from = min(ArrivalTime),

to = max(ArrivalTime),

length = 10))

# B. Create X matrix with expand.grid

X <- model.matrix(~ FoodTreatment + ArrivalTime + SexParent +

FoodTreatment:SexParent +

FoodTreatment:ArrivalTime,

data = MyData)

# C. Calculate predicted values

MyData$eta <- X %*% fixef(M1)

MyData$mu <- exp(MyData$eta)

# D. Calculate standard errors (SE) for predicted values

# SE of fitted values are given by the square root of

# the diagonal elements of: X * cov(betas) * t(X)

MyData$SE <- sqrt(diag(X %*% vcov(M1) %*% t(X)))

MyData$seup <- exp(MyData$eta + 1.96 * MyData$SE)

MyData$selo <- exp(MyData$eta - 1.96 * MyData$SE)

# E. Plot predicted values +/- 2* SE

p_fitted <- ggplot() +

xlab("Arrival time (h)") + ylab("Number of calls") +

geom_point(data = Owls,

aes(x = ArrivalTime, y = NCalls),

position = position_jitter(width = .01),

color = grey(0.2),

size = 1) +

geom_line(data = MyData,

aes(x = ArrivalTime,

y = mu)) +

geom_ribbon(data = MyData,

aes(x = ArrivalTime,

ymax = seup,

ymin = selo),

alpha = 0.5) +

facet_grid(SexParent ~ FoodTreatment,

scales = "fixed") +

theme_light() + theme(text = element_text(size = 15)) +

theme(legend.position = "none")

p_fitted

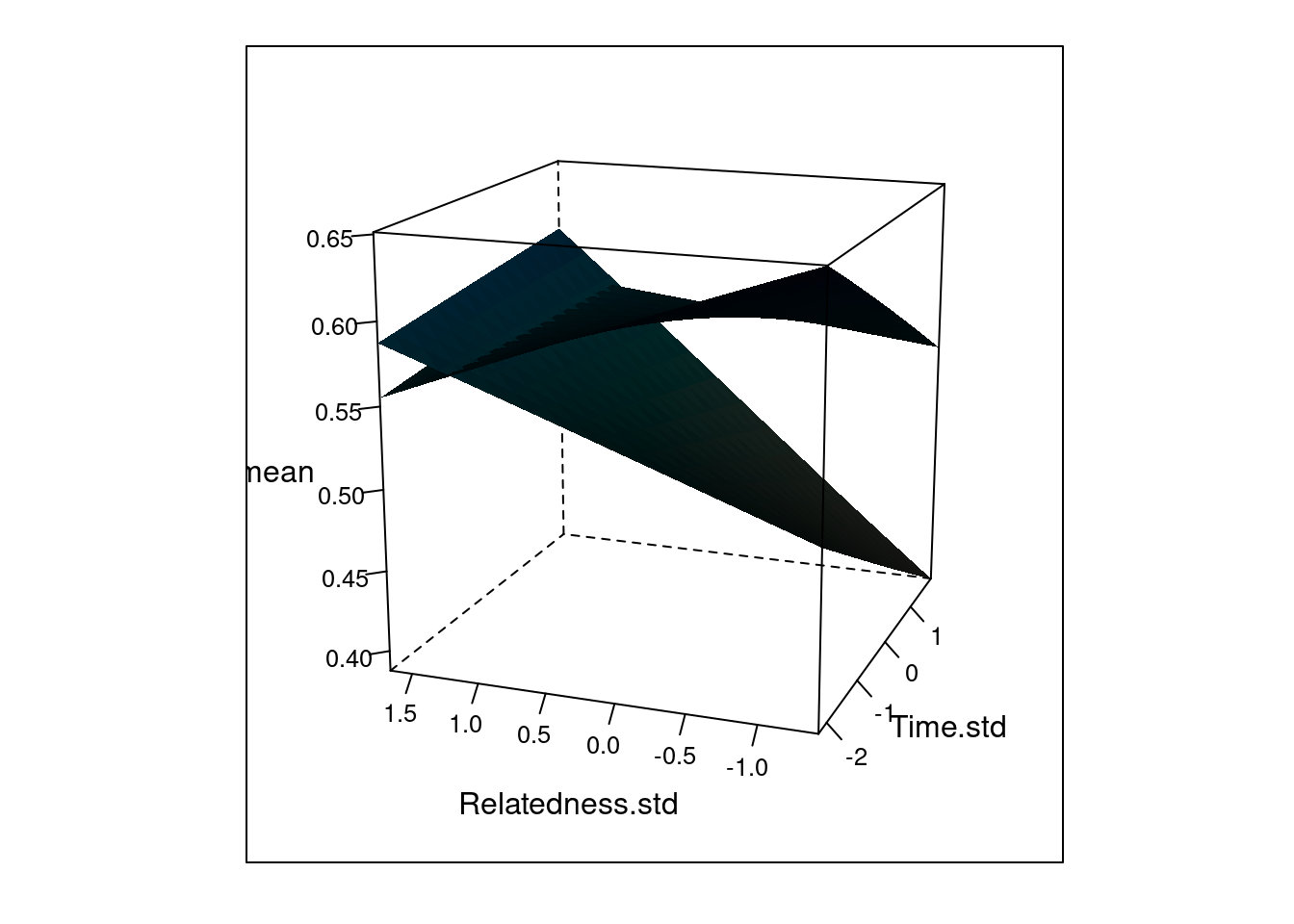

For the baboon model, the authors recommends to plot the model fit in a way that highlights the interactions between the continuous and categorical covariate, as well as between the two continuous covariates. I prefer the paste the entire quote that justifies the 3D plot:

- The figure “shows two planes, one representing the small group and for the other representing the large group. The small group exhibited a positive effect of relatedness on rank differences early in the day and a negative effect later in the day, as well as a negative time effect for low relatedness values and a positive time effect for higher relatedness values. The large group shows a negative relatedness effect early in the day”.

The code was written before R 4.0 and the breaking change concerning characters no longer being read as factors when loading the data, so we need to change another couple of things to get the expected behavior as well. Let’s get the predictions ready.

#Get the realisations of the betas

Beta.mcmc <- out$sims.list$beta

# Create a grid of covariate values

time_range <- range(Monkeys_sub$Time.std)

relate_range <- range(Monkeys_sub$Relatedness.std)

MyData <-

expand.grid(Time.std = seq(time_range[1], time_range[2], length = 15),

Relatedness.std = seq(relate_range[1], relate_range[2], length = 15),

GroupSize = levels(as.factor(Monkeys_sub$GroupSize)))

# Convert the grid into a X matrix, and calculate the fitted

# values for each (!) MCMC iteration

X <- model.matrix(~Time.std * Relatedness.std +

Relatedness.std * GroupSize,

data = MyData)

Betas <- Beta.mcmc

eta <- X %*% t(Betas)

mu <- exp(eta) / (1 + exp(eta))

L <- MyLinesStuff(mu)

str(L) #Posterior mean, se and 95% CI for each of these covariate values num [1:450, 1:4] 0.651 0.644 0.637 0.63 0.622 ...

- attr(*, "dimnames")=List of 2

..$ : NULL

..$ : chr [1:4] "mean" "se" "2.5%" "97.5%"#Glue the results in L to the MyData object

MyData <- cbind(MyData,L)

str(MyData)'data.frame': 450 obs. of 7 variables:

$ Time.std : num -2.25 -1.96 -1.66 -1.37 -1.07 ...

$ Relatedness.std: num -1.41 -1.41 -1.41 -1.41 -1.41 ...

$ GroupSize : Factor w/ 2 levels "large","small": 1 1 1 1 1 1 1 1 1 1 ...

$ mean : num 0.651 0.644 0.637 0.63 0.622 ...

$ se : num 0.0425 0.0417 0.041 0.0403 0.0397 ...

$ 2.5% : num 0.575 0.57 0.564 0.559 0.552 ...

$ 97.5% : num 0.73 0.72 0.711 0.702 0.693 ...The code to make figure 8 is not like in the paper.

# In the paper we use a slighly different angle. Change

# the i value, or put a loop around this code in which i

# runs form 1 to 1000.

i <- 70

p_3D <- wireframe(mean ~ Time.std + Relatedness.std,

data = MyData,

group = GroupSize,

#zlim = c(0,1),

shade = TRUE,

scales = list(arrows = FALSE),

drape = TRUE,

colorkey = FALSE,

screen = list(z = i, x = -60 - i /5))

print(p_3D)

So let’s try and use a better solution. Both rgl and plotly are great for 3D plotting. Let’s give rgl a try.

plot3d(

y = MyData$Relatedness.std,

z = MyData$mean,

x = MyData$Time.std,

# col = data$color,

type = 's',

radius = .1,

ylab = "Relatedness std", zlab = "Mean", xlab = "Time.std"

)

# saveWidget(rglwidget(width = 520, height = 520),

# file = here("widgets", "rgl_plot.html"),

# libdir = "libs",

# selfcontained = FALSE

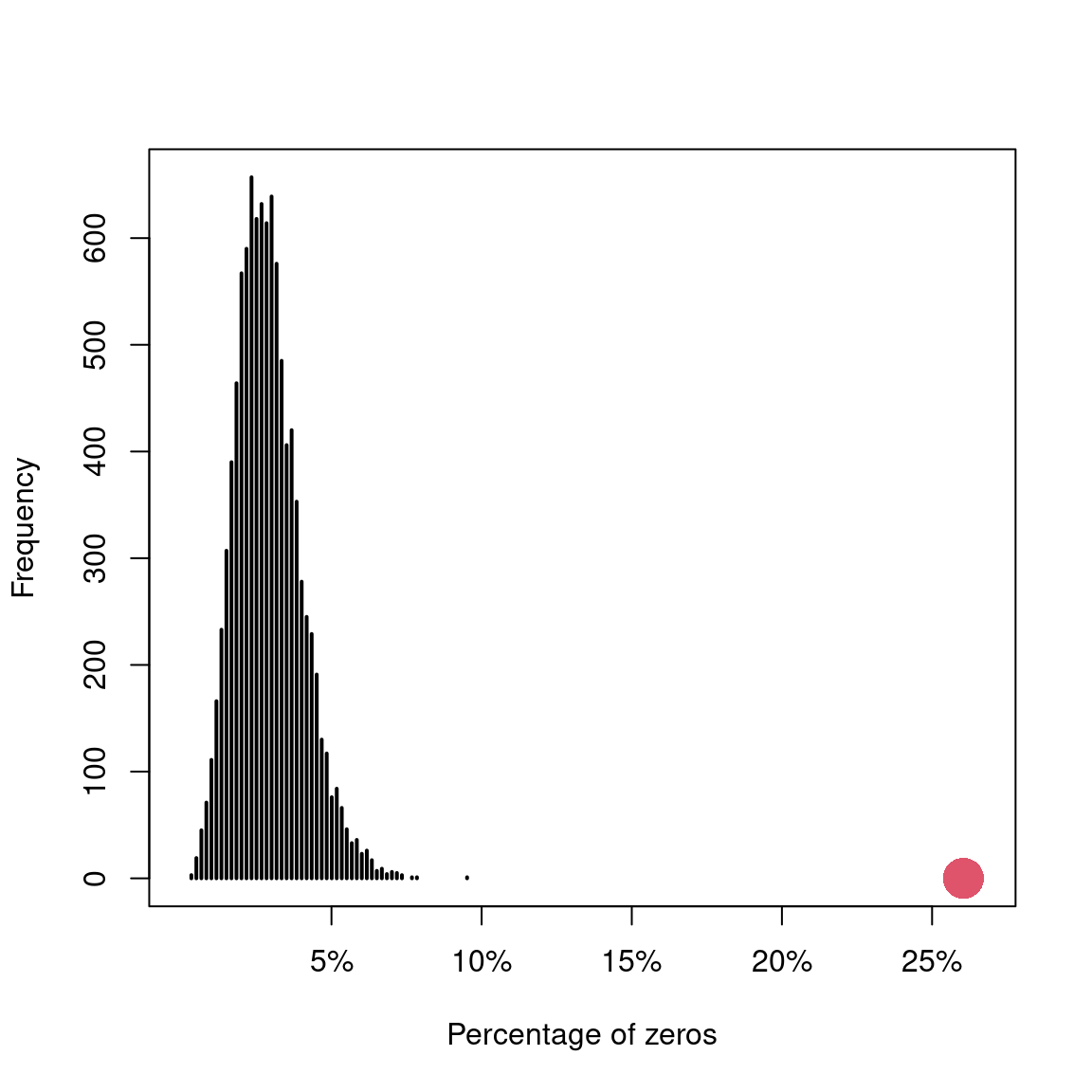

# )Step 10: Simulate from the model

In this section the authors simulate from the model and show that the model underestimates the amount of 0s.

N <- nrow(Owls)

gg <- simulate(M1, 10000)

zeros <- vector(length = 10000)

for (i in 1:10000) {

zeros[i] <- sum(gg[,i] == 0) / N

}

plot(table(zeros),

xlim = c(0, 160 / N),

axes = FALSE,

xlab = "Percentage of zeros",

ylab = "Frequency")

axis(2)

axis(1, at = c(0.05, .10, 0.15, 0.20, 0.25),

labels = c("5%", "10%", "15%", "20%", "25%"))

points(x = sum(Owls$NCalls == 0) / N, y = 0, pch = 16, cex = 3, col = 2)

They also mention the use of cross-validation and counterfactuals (“what ifs”).

I found that simulation could have been introduced earlier: simulating specific pattern in the data to make sure our understanding of the model is accurate (a la statistical rethinking).